
Processing a Large Log File of ~10GB Using Java Parallel Streams

Understanding the Problem

You have a 10 GB bank transaction log file in which each record describes a single transaction. Your task is to process the file, keep only the transactions whose amount is higher than 10,000, and sum those amounts. Because the file is large, the goal is to use parallelism to speed up the computation.
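Before adding parallelism, it can help to write the task down as a plain sequential pipeline. The sketch below is only illustrative: the article does not specify the record layout, so the comma-separated `transactionId,accountId,amount` format, the class name `LargeTransactionSum`, and the helper names are all assumptions. It also reads the task as "sum the amounts strictly above 10,000".

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.stream.Stream;

final class LargeTransactionSum {

    // ASSUMPTION: each line looks like "transactionId,accountId,amount".
    // The real log format is not given in the article.
    static double parseAmount(String line) {
        String[] fields = line.split(",");
        return Double.parseDouble(fields[2].trim());
    }

    // Keeps only amounts strictly greater than the threshold and adds them up.
    static double sumLargeTransactions(Stream<String> lines, double threshold) {
        return lines
                .mapToDouble(LargeTransactionSum::parseAmount)
                .filter(amount -> amount > threshold)
                .sum();
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java LargeTransactionSum <log-file>");
            return;
        }
        Path logFile = Path.of(args[0]);
        // Files.lines reads lazily, so the whole file is never held in memory.
        // try-with-resources closes the underlying file handle.
        try (Stream<String> lines = Files.lines(logFile)) {
            System.out.println(sumLargeTransactions(lines, 10_000));
        }
    }
}
```

Because `Files.lines` streams the file lazily, memory use stays small even for a 10 GB input; what remains to optimize is the time spent parsing and filtering.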

Parallel Streams Approach

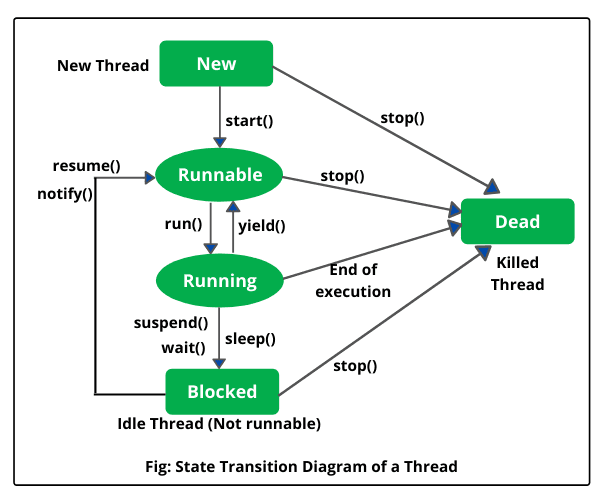

In Java, the Stream API supports both sequential and parallel processing of data. With a parallel stream, Java splits the data into multiple parts and processes them simultaneously on different threads (by default, the worker threads of the common fork/join pool), making use of multiple CPU cores. This approach is particularly useful for large datasets, where dividing the work can reduce the overall processing time.
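To make the sequential/parallel distinction concrete, here is a minimal sketch that runs the same filter-and-sum pipeline both ways over an in-memory list. The class and method names are hypothetical, and amounts are held as whole cents in a `long` (an assumption chosen for this example) so that both modes return exactly the same total; with `double` values, a parallel sum may add numbers in a different order and differ in the last digits.

```java
import java.util.List;
import java.util.concurrent.ForkJoinPool;
import java.util.stream.Collectors;
import java.util.stream.LongStream;
import java.util.stream.Stream;

final class ParallelVsSequential {

    // Sums all amounts (in cents) strictly above the threshold.
    // The only difference between the two modes is how the stream is created.
    static long sumAbove(List<Long> amountsInCents, long thresholdInCents, boolean parallel) {
        Stream<Long> stream = parallel
                ? amountsInCents.parallelStream()
                : amountsInCents.stream();
        return stream
                .mapToLong(Long::longValue)
                .filter(amount -> amount > thresholdInCents)
                .sum();
    }

    public static void main(String[] args) {
        // Synthetic data for illustration only: amounts 1..2,000,000 cents.
        List<Long> amounts = LongStream.rangeClosed(1, 2_000_000)
                .boxed()
                .collect(Collectors.toList());

        long sequential = sumAbove(amounts, 1_000_000, false);
        long parallel = sumAbove(amounts, 1_000_000, true);

        System.out.println("common pool parallelism: "
                + ForkJoinPool.commonPool().getParallelism());
        System.out.println("sequential total: " + sequential);
        System.out.println("parallel total:   " + parallel);
        System.out.println("totals match:     " + (sequential == parallel));
    }
}
```

The pipeline itself is unchanged between the two modes, which is the main appeal of parallel streams. Whether the parallel version is actually faster depends on the data source, the cost of each operation, and the number of available cores, so it is worth measuring rather than assuming.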

How Parallel Streams Work

- Splitting the Data: When you use a parallel stream, Java will automatically partition the data into chunks that can be processed independently. These partitions are processed on multiple CPU cores.

- Parallel Processing: Each chunk of…